Among the main limitations of current large language models (LLMs), which generate text from the inputs provided, are hallucinations and the fact that the models often struggle to provide up-to-date information. In general, generative models have no visibility into recent events and therefore no awareness of current news.

The Perplexity chatbot is one of the most popular: compared to ChatGPT, it shows a greater “awareness” of the freshest content published online and can therefore answer questions about recent events and news.

The pplx-7b-online and pplx-70b-online models make AI more aware of current events

With the introduction of the new models pplx-7b-online and pplx-70b-online, Perplexity overturns the idea that a generative model cannot provide current, up-to-date answers.

The two models are built on mistral-7b and llama2-70b respectively: we have covered both extensively on how2do.org. The search, indexing and crawling infrastructure developed in-house by Perplexity's software engineers enriches the models with relevant, up-to-date information. As a result, the brand-new pplx-7b-online and pplx-70b-online can make effective use of information extracted from the web.

How the Perplexity models that also know the latest facts work

Three main criteria are used to evaluate model performance at Perplexity:

- Helpfulness: Which answer best addresses the question and follows the user's instructions?

- Factuality: Which answer provides accurate information, free of hallucinations?

- Freshness: Which answer contains the most up-to-date information?

The models just unveiled by Perplexity outperform OpenAI's GPT-3.5 and llama2-70b on all three of these criteria, based on evaluations carried out by human reviewers.

Of course, some underlying problems remain, although they should diminish as the models are used more intensively. In our tests, for example, the new Perplexity models failed to report a recent sports result on the first attempt, gave nonsensical information, or misinterpreted rankings. Furthermore, when prompted in a European language, the model occasionally makes grammatical errors or produces unconvincing sentence constructions.

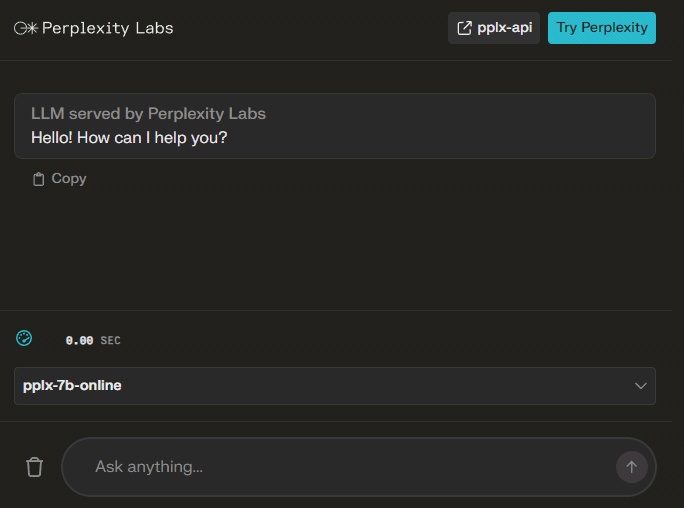

How to test the new models

If you want to try the new generative models yourself, simply go to the Perplexity Labs Playground. The drop-down menu at the bottom right lets you select the LLM you prefer: those with the suffix “online” in the name are designed to have greater visibility into recent content, including news events. The labels “7b” and “70b” indicate the size of each model in billions of parameters.

Once you enter a description of what you want in the Ask anything field, the model immediately begins processing the input and also reports some statistics, such as the number of tokens used, the processing speed (expressed in tokens per second) and the duration of the operation.
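
The speed figure can be cross-checked from the other two statistics. The following is a minimal sketch, assuming the Playground reports a token count and a duration in seconds; the function name and the sample values are hypothetical and not taken from Perplexity.

```python
def tokens_per_second(token_count: int, duration_seconds: float) -> float:
    """Return the generation speed given a token count and a duration.

    Both inputs are assumed to come from the statistics shown after a
    response; the names are illustrative only.
    """
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    return token_count / duration_seconds


# Hypothetical reading: 300 tokens produced in 2.5 seconds.
speed = tokens_per_second(300, 2.5)
print(f"{speed:.1f} tokens/s")
```

With these made-up values, 300 tokens in 2.5 seconds works out to 120 tokens per second.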

Availability of APIs for integration into your own projects

The new pplx-api, made available by Perplexity, lets you connect the generative models to your own applications. Developers can therefore use the API (Application Programming Interface) to enrich their applications with advanced features based on artificial intelligence.

With pplx-api the programmer can also access the pplx-7b-online and pplx-70b-online models to generate answers to specific questions. Thanks to the “online” nature of these models, the API lets you draw on up-to-date knowledge gathered from the web to respond more precisely and promptly.
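
As an illustration of what such an integration might look like, here is a minimal sketch using only the Python standard library. The article does not give the endpoint, the request format or the response layout: the URL, the OpenAI-style `messages` payload, the `choices[0].message.content` path and the `PPLX_API_KEY` environment variable are all assumptions to be checked against the official pplx-api documentation. Only the model names come from the text above.

```python
import json
import os
import urllib.request

# Assumed endpoint, not stated in the article: verify in the pplx-api docs.
API_URL = "https://api.perplexity.ai/chat/completions"


def build_request(question: str, model: str = "pplx-7b-online",
                  api_key: str = "") -> urllib.request.Request:
    """Prepare (but do not send) a chat-completion style request."""
    # Assumed payload shape: a model name plus a list of chat messages.
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(question: str, model: str = "pplx-7b-online") -> str:
    """Send the question and return the text of the first answer."""
    api_key = os.environ["PPLX_API_KEY"]  # hypothetical variable name
    request = build_request(question, model, api_key)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumed response layout (OpenAI-style); adjust if the API differs.
    return body["choices"][0]["message"]["content"]
```

With a valid key exported in the environment, a call such as `ask("What are today's top technology headlines?", model="pplx-70b-online")` would return the model's answer as a string. Keeping request construction separate from sending makes the payload easy to inspect without touching the network.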

Perplexity Pro subscribers receive $5 in free credit every month, useful for testing the potential of the API and the models. The actual price varies with the volume of data processed: for the lightest model, with 7 billion parameters, it starts at 7 cents per 1 million input tokens and 28 cents per 1 million output tokens.

For the newer “online” models, however, Perplexity does not charge for input tokens; instead it asks 5 dollars for every 1,000 requests plus 28 cents per 1 million output tokens produced.
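
To get a feel for how the two pricing schemes compare, the arithmetic can be sketched as follows. The rates are the ones quoted above; the `Pricing` class, the `estimate_cost` function and the sample workload are hypothetical names and numbers chosen purely for illustration, and actual billing may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float      # USD per 1 million input tokens
    output_per_million: float     # USD per 1 million output tokens
    per_thousand_requests: float  # USD per 1,000 requests


# Rates as quoted in the article (may have changed since publication).
PPLX_7B = Pricing(0.07, 0.28, 0.0)
PPLX_ONLINE = Pricing(0.0, 0.28, 5.0)


def estimate_cost(pricing: Pricing, requests: int,
                  input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in USD for a given workload."""
    return (
        pricing.input_per_million * input_tokens / 1_000_000
        + pricing.output_per_million * output_tokens / 1_000_000
        + pricing.per_thousand_requests * requests / 1_000
    )


# Hypothetical workload: 1,000 requests, 1M input and 1M output tokens.
for name, pricing in (("7b", PPLX_7B), ("online", PPLX_ONLINE)):
    cost = estimate_cost(pricing, 1_000, 1_000_000, 1_000_000)
    print(f"{name}: ${cost:.2f}")
```

For this made-up workload the 7-billion-parameter model costs about $0.35, while an “online” model costs about $5.28, almost all of it from the per-request fee.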

Opening image credit: iStock.com/da-kuk