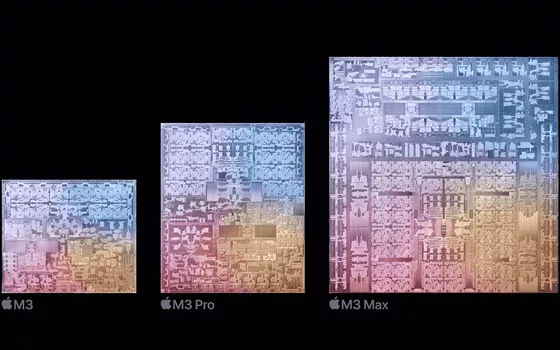

Apple unveiled the first devices based on the M3 chip on October 31, 2023. The latest Apple Silicon SoC family includes the M3, M3 Pro and M3 Max, which are enhanced versions of the M2 chip with more transistors, a revised architecture and optimized memory use.

During the Scary Fast event at the end of last October, Apple also presented the new MacBook Pro with M3 chips, which offers a high-performance GPU, a powerful CPU and support for up to 128 GB of memory. The company led by Tim Cook also put the spotlight on the new 24″ iMac, likewise built around the M3 chip, which offers up to 2x better performance than the previous-generation model with the M1 chip.

But what are the main differences between the M3 chips and the SoCs of previous generations, the M1 and M2?

Apple Silicon: differences between M3 chips and M1/M2 SoCs

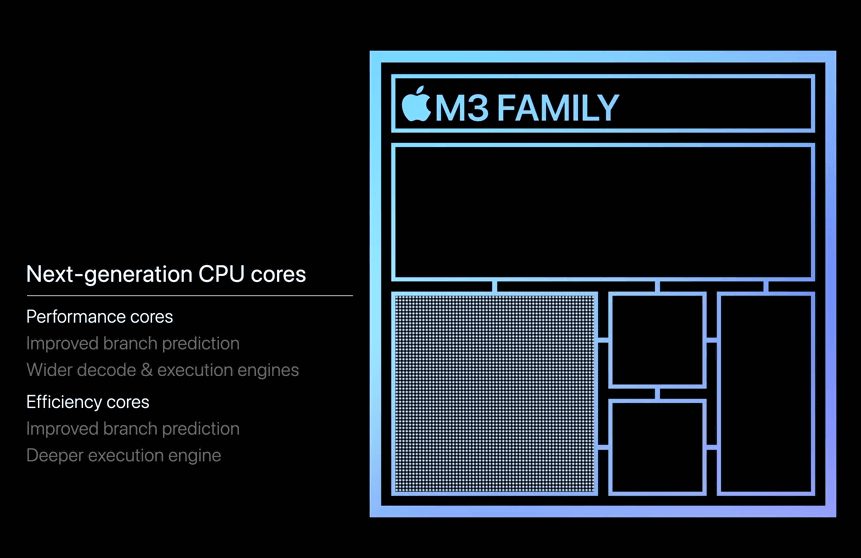

One of the most obvious differences between the Apple M3 SoCs and those of previous generations lies in cluster size. While the M1 and M2 chips organize their CPU cores into clusters of 2 or 4, the M3 chips (especially the M3 Pro and M3 Max) feature clusters of 4 or even 6 cores. This has to be weighed when choosing the chip best suited to each user's needs. The way macOS assigns threads to cores according to their priority becomes crucial, and it influences both performance and energy consumption.

The developers at “The Eclectic Light Company” build tools such as Viable, which lets you run virtual machines on Apple Silicon Macs. This time they focused on an initial analysis of how the M3 chips behave and of the changes Apple's engineers made to them.

E cores: frequencies and priorities

The E cores in the M3 Pro show an improvement in maximum frequency, reaching 2748 MHz compared to the 2064 MHz of the M1 chips. However, frequency management differs when running low-priority threads: the E cores of the M3 Pro run at 744 MHz, significantly lower than the 972 MHz of the M1 Pro. Thread priority therefore becomes a determining factor in performance: the E cores run threads considered high priority at their maximum frequency.

Overall, the E cores of the M3 appear similar to those of the M1, but have a higher maximum frequency and run at a lower clock speed for background activity.
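To put the quoted clock speeds in proportion, the short sketch below computes the relative change between generations. It uses only the MHz figures given above; the dictionary and function names are illustrative choices, not part of any tool mentioned in the article.

```python
# Clock speeds in MHz, taken from the figures quoted above.
E_CORE_MAX_MHZ = {"M1": 2064, "M3 Pro": 2748}
E_CORE_BACKGROUND_MHZ = {"M1 Pro": 972, "M3 Pro": 744}


def percent_change(old, new):
    """Return the relative change from old to new, in percent."""
    return (new - old) / old * 100


max_change = percent_change(E_CORE_MAX_MHZ["M1"], E_CORE_MAX_MHZ["M3 Pro"])
background_change = percent_change(
    E_CORE_BACKGROUND_MHZ["M1 Pro"], E_CORE_BACKGROUND_MHZ["M3 Pro"]
)

print(f"Maximum E core frequency: {max_change:+.1f}%")
print(f"Background E core frequency: {background_change:+.1f}%")
```

With these figures, the maximum frequency is about a third higher, while the frequency used for background threads is roughly a quarter lower.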

P cores: higher frequencies and performance improvements

The differences in the P cores are evident, with the M3 Pro reaching a maximum frequency of 4056 MHz compared to the 3228 MHz of the M1. This translates into significant improvements in integer and floating-point computations. The leap in performance is most visible in vector operations that use NEON or Apple's Accelerate library.

NEON is a SIMD (Single Instruction, Multiple Data) instruction set designed by ARM, the architecture on which Apple Silicon chips are based. SIMD instructions perform the same operation on multiple data elements simultaneously, greatly improving performance in data-intensive work such as vector computing and graphics processing.
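The SIMD idea can be illustrated with a purely conceptual sketch. The Python below does not use NEON and gains no speed; it only counts how many "instructions" are needed when four values are handled per step, assuming a 128-bit register that holds four 32-bit floats. The function names are hypothetical.

```python
LANES = 4  # assumption: a 128-bit vector register holding four 32-bit floats


def scalar_add(a, b):
    """Add element by element: one operation per pair of values."""
    out = []
    operations = 0
    for x, y in zip(a, b):
        out.append(x + y)
        operations += 1
    return out, operations


def simd_style_add(a, b, lanes=LANES):
    """Add in chunks of `lanes` values: one operation per chunk."""
    out = []
    operations = 0
    for i in range(0, len(a), lanes):
        # On real SIMD hardware this whole chunk is a single instruction.
        out.extend(x + y for x, y in zip(a[i:i + lanes], b[i:i + lanes]))
        operations += 1
    return out, operations


a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
b = [10.0] * 8
scalar_result, scalar_ops = scalar_add(a, b)
simd_result, simd_ops = simd_style_add(a, b)
print(scalar_ops, simd_ops)
```

Both functions produce the same sums; the difference is only in the number of steps, which is the effect SIMD hardware exploits.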

The improved design of the NEON unit in the P cores of the M3 chips is what drives the gains in vector computing and in multimedia applications; it is especially useful for accelerating operations such as image processing, 3D graphics and video decoding.

Apple's Accelerate library provides a set of functions for complex, computation-intensive operations on numerical data. These functions are written in assembly language and highly optimized to take full advantage of the hardware capabilities of Apple chips.

The performance of the P and E cores in handling high workloads

Setting aside the clear improvements in vector processing that characterize the M3 cores, the new chips show different performance patterns under load.

To measure performance, “The Eclectic Light Company” used the AsmAttic utility, which runs a series of loops performing floating-point calculations that access only registers, not memory. Each thread executes 200 million iterations of assembly code, keeping its core at 100% load.

P core throughput

Examining the first graph shown on this page, we observe the following:

- For the M1 Pro (red line) the behavior is almost perfectly linear, with each thread completely occupying a core for a period of 1.3 seconds.

- For the M3 Pro (black line), the first 6 threads run on the P cores, and additional threads run on an increasing number of E cores. The relationship is fairly linear up to 6 threads, which complete in significantly less time than on the M1 Pro.

From 6 to 8 threads, the two lines move in parallel, indicating that the M3 Pro’s E cores offer similar performance to the M1 Pro’s P cores.
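The placement pattern described for the M3 Pro can be sketched as a simple fill rule. This is a deliberately simplified model, not how the macOS scheduler is implemented: it assumes high-priority threads fill the P cores first and spill onto E cores only afterwards, and it uses the 6 P and 6 E core counts mentioned in the text. The function name `place_threads` is hypothetical.

```python
def place_threads(n_threads, p_cores=6, e_cores=6):
    """Return (threads on P cores, threads on E cores, threads left waiting).

    Simplified assumption: P cores fill first, then E cores, and any
    remaining threads have to wait for a free core.
    """
    on_p = min(n_threads, p_cores)
    on_e = min(n_threads - on_p, e_cores)
    waiting = n_threads - on_p - on_e
    return on_p, on_e, waiting


for n in (4, 6, 8):
    on_p, on_e, waiting = place_threads(n)
    print(f"{n} threads: {on_p} on P cores, {on_e} on E cores, {waiting} waiting")
```

Under this model, threads 7 and 8 land on E cores, which is the range where the graph compares the M3 Pro's E cores with the M1 Pro's P cores.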

E core throughput

The next graph is for running low priority threads on the E cores, comparing the M1 Pro’s 2 E cores to the M3 Pro’s 6 E cores:

- For the M1 Pro (red line), the frequency of the E cores increases when running a second thread, explaining the slight variation in total time from 1 to 2 threads. However, with more than 2 threads, subsequent threads are queued and performance suffers.

- For the M3 Pro (black line), the 6 E cores have triple the capacity for handling background threads. Although they run slower, they handle up to 6 threads. Above this number, threads are automatically queued and the time needed to complete them increases more quickly.
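The queuing effect described above can be approximated with a small idealized model. It assumes every thread takes the same time and that waiting threads run in whole "rounds" once cores free up; real scheduling and the frequency changes noted for the M1 Pro are ignored. The function name `rounds_needed` is hypothetical, and the result is expressed in multiples of one thread's run time rather than in seconds.

```python
import math


def rounds_needed(n_threads, e_cores):
    """Number of back-to-back rounds needed to run all threads.

    Idealized assumption: each core runs one thread at a time, and all
    threads take the same amount of time.
    """
    return math.ceil(n_threads / e_cores)


for n in (2, 3, 6, 7):
    m1_pro = rounds_needed(n, e_cores=2)  # M1 Pro: 2 E cores
    m3_pro = rounds_needed(n, e_cores=6)  # M3 Pro: 6 E cores
    print(f"{n} threads: M1 Pro {m1_pro} round(s), M3 Pro {m3_pro} round(s)")
```

The model reproduces the thresholds in the text: queuing starts after 2 threads with 2 E cores and after 6 threads with 6 E cores.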

Conclusions

The analysis reveals distinct patterns in the performance of the P and E cores during load tests with tools such as AsmAttic. The M3 Pro shows a noticeable improvement when multiple threads are running, using its combination of P and E cores more efficiently than the M1 Pro.

In conclusion, the transition to M3 chips represents a significant step in the evolution of the Apple Silicon line. Differences in cluster sizes, core frequencies, and design improvements result in an improved user experience. However, the actual impact on real-world scenarios will require further analysis and testing.

Looking ahead, it will be interesting to see how Apple further optimizes hardware and software integration to deliver even more impressive performance.