Among the many LLMs (large language models) released in recent months, OpenChat is certainly one of those that stand out most clearly. Presented in November 2023, it counts “only” 7 billion parameters (7B) yet manages to outperform OpenAI's ChatGPT in multiple reference benchmarks.

What OpenChat is and how it works

OpenChat is an innovative library that integrates open source language models subjected to careful optimization (fine-tuning).

C-RLFT (Conditioned Reinforcement Learning Fine-Tuning) is the strategy OpenChat uses to train language models; it is inspired by offline reinforcement learning. Reinforcement learning is a technique for training artificial intelligence through interaction with an environment, receiving feedback in the form of rewards or “punishments”. The term offline indicates that training does not take place in real time but uses previously collected data.

In the case of OpenChat, offline reinforcement learning is used to improve responses by evaluating past conversations and updating the model accordingly.
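To make the idea more concrete, here is a toy sketch of a reward-weighted loss computed over previously collected data. It is not OpenChat's training code: the source names, the weights, and the log-probabilities are all illustrative assumptions, and the label prefix is just one hypothetical way of "conditioning" a prompt on where the data came from.

```python
import math

# Illustrative assumptions: two data sources of different quality, each with a
# coarse reward used as a loss weight. Names and values are hypothetical.
SOURCE_WEIGHT = {"expert": 1.0, "sub_optimal": 0.1}

def conditioned_prompt(source, prompt):
    """Prefix the prompt with its source label so a model could condition on it."""
    return f"[{source}] {prompt}"

def weighted_nll(samples):
    """Reward-weighted negative log-likelihood over a fixed (offline) dataset."""
    total = 0.0
    weight_sum = 0.0
    for sample in samples:
        weight = SOURCE_WEIGHT[sample["source"]]
        # log_prob stands in for the log-probability the model assigns
        # to the recorded response.
        total += -weight * sample["log_prob"]
        weight_sum += weight
    return total / weight_sum

dataset = [
    {"source": "expert", "prompt": "Explain batching.", "log_prob": math.log(0.5)},
    {"source": "sub_optimal", "prompt": "Explain batching.", "log_prob": math.log(0.25)},
]
loss = weighted_nll(dataset)
print(conditioned_prompt("expert", dataset[0]["prompt"]))
print(f"weighted loss: {loss:.4f}")
```

Because the data is fixed in advance, nothing here interacts with an environment: higher-quality conversations simply pull harder on the loss than lower-quality ones.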

With OpenChat you can get performance comparable to that of ChatGPT, even on a “consumer” GPU (for example an NVIDIA GeForce RTX 3090).

Main characteristics of the model

- Model dimensions and performance: the 7-billion-parameter model (OpenChat-3.5-7B) achieved results comparable with ChatGPT on several benchmarks. OpenChat-3.5 also surpassed other open source models, such as OpenHermes 2.5 and OpenOrca Mistral, on metrics like MT-Bench, AGIEval, BBH MC, TruthfulQA, MMLU, HumanEval, BBH CoT and GSM8K.

- Frequent updates: OpenChat regularly releases new versions and updates. For example, the OpenChat-3.5-7B model was released on November 1, 2023.

- License and accessibility: OpenChat is distributed under the Apache-2.0 license, making it open source and accessible. Users can install it using pip or conda.

- API server: OpenChat offers an API server ready for use in production environments that is fully compatible with the OpenAI API protocol. The software architecture also allows incoming requests to be processed dynamically in batches.

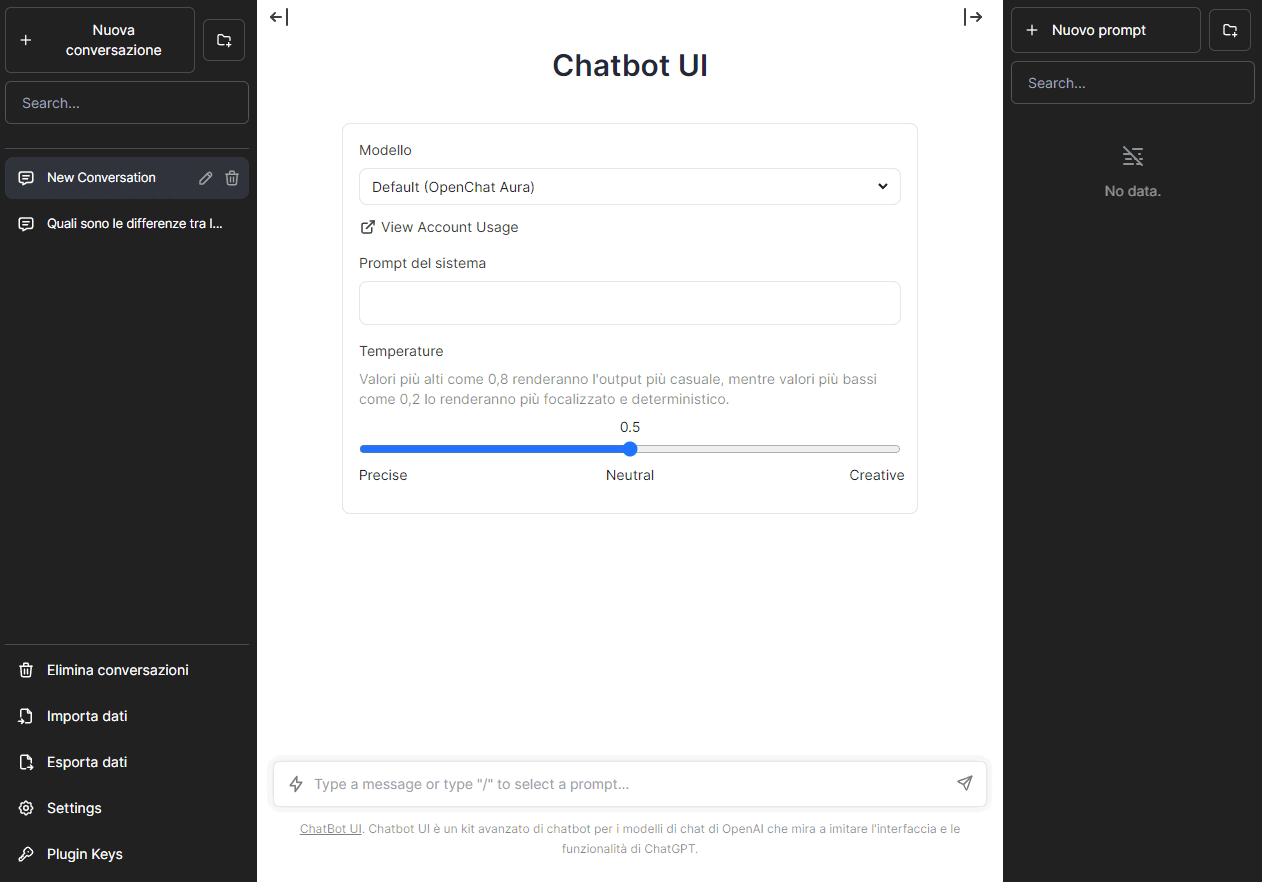

- Web user interface (Web UI): alongside the APIs that facilitate dialogue with client devices, OpenChat provides a Web UI that simplifies interaction with the model.
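Since the API server follows the OpenAI API protocol, a client can talk to it with an ordinary chat completion request. The sketch below uses only the Python standard library; the server address and the model name are assumptions that depend on how the server was started, so adjust them to your own deployment.

```python
import json
import urllib.request

# Assumptions (not from the article): a local OpenChat API server listening on
# this address, and this model name. Adjust both to your deployment.
BASE_URL = "http://localhost:18888/v1"
MODEL_NAME = "openchat_3.5"

def build_chat_request(prompt, temperature=0.7):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

request = build_chat_request("Summarize what fine-tuning is in one sentence.")
print(request.full_url)
```

Calling `ask("...")` requires the server to be running. Building the request separately makes it easy to inspect the payload first.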

How to try OpenChat

The developers of OpenChat have made a free online demo available that allows you to send a series of prompts to the generative model and evaluate the answers it provides.

On the main page of the open source chatbot, you can create conversations and prompts. The system keeps track of the information given to it within the same conversation.

You can also change the Temperature value to make the output more random and “creative” (higher values) or, vice versa, more focused and precise (lower values).
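The effect of temperature can be illustrated with the way samplers commonly apply it: the model's raw scores are divided by the temperature before being turned into probabilities. The article does not describe how the demo implements this, so the sketch below shows the general technique with made-up scores.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
focused = softmax_with_temperature(logits, 0.5)
creative = softmax_with_temperature(logits, 1.5)
print([round(p, 3) for p in focused])
print([round(p, 3) for p in creative])
```

With the lower temperature, most of the probability mass concentrates on the top-scoring token; with the higher one, the distribution flattens and less likely tokens are sampled more often.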

By clicking on Settings in the left column, you can also choose a different theme and opt for the one with a light background.

Conversations can be exported and imported, deleted and managed as you see fit.

To install and use OpenChat locally, the simplest solution is to use Ollama, running the framework inside a Docker container. The command below assumes a container named ollama is already running:

docker exec -it ollama ollama run openchat
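Once the model is available under Ollama, it can also be queried programmatically through Ollama's HTTP API rather than the interactive prompt. The sketch below assumes the container publishes Ollama's default port 11434 on localhost and that the openchat model has already been downloaded; neither detail comes from the article.

```python
import json
import urllib.request

# Assumptions: the Ollama container publishes its default port 11434 on
# localhost, and the "openchat" model has already been pulled.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_generate_request(prompt):
    """Build a non-streaming generation request for the openchat model."""
    payload = {"model": "openchat", "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt):
    """Send the request and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt)) as response:
        return json.load(response)["response"]

ollama_request = build_generate_request("Why is the sky blue?")
print(ollama_request.full_url)
```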

More information is available on the official OpenChat GitHub repository.

Training and customization

The language model underlying OpenChat can also learn from conversations provided as input, adapting its behavior and responses to the data made available. Further training lets you adapt the model to the specific needs of the user and the context.

Before you start training, you need to select a base model to fine-tune. OpenChat supports models like Llama 2 and Mistral, each with specific sizes and characteristics.

Training data is obviously essential. In OpenChat, conversations are represented as JSON objects: each line of the dataset corresponds to a “Conversation” object containing “user” and “assistant” messages with their labels and weights.
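As a rough illustration of the one-object-per-line layout, the sketch below serializes a single conversation as one line of a JSON Lines file. The field names (`items`, `role`, `content`, `weight`, `condition`) are assumptions made for the example; check the OpenChat repository for the exact schema before preparing a real dataset.

```python
import json

# Field names are assumptions for illustration, not a confirmed schema.
conversation = {
    "items": [
        {"role": "user", "content": "What is fine-tuning?", "weight": 0.0},
        {
            "role": "assistant",
            "content": "Further training of a pretrained model on new data.",
            "weight": 1.0,  # only weighted messages would contribute to the loss
        },
    ],
    "condition": "",  # hypothetical label describing the data source
}

def to_jsonl_line(conv):
    """Serialize one conversation as a single line of a JSON Lines file."""
    for item in conv["items"]:
        if item["role"] not in ("user", "assistant"):
            raise ValueError(f"unexpected role: {item['role']}")
    return json.dumps(conv, ensure_ascii=False)

line = to_jsonl_line(conversation)
print(line)
```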

The dataset you want to “feed” to OpenChat must be pre-tokenized, that is, converted into a tokenized format for a specific model. This is an important step to speed up the training phase.
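The principle behind pre-tokenization can be shown with a toy example: text is converted to integer token IDs once, ahead of time, so the training loop never has to process raw strings. The whitespace tokenizer below is a deliberately simplified stand-in for a real model tokenizer and is not what OpenChat uses.

```python
def build_vocab(texts):
    """Assign an integer ID to each distinct word, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.split():
            vocab.setdefault(word, len(vocab))
    return vocab

def pre_tokenize(texts, vocab):
    """Convert every text into a list of token IDs, once, before training."""
    return [[vocab[word] for word in text.split()] for text in texts]

texts = ["hello world", "hello again"]
vocab = build_vocab(texts)
token_ids = pre_tokenize(texts, vocab)
print(token_ids)  # [[0, 1], [0, 2]]
```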

The actual training involves optimizing the model's parameters: OpenChat uses DeepSpeed, an optimization library built on PyTorch, for distributed training on GPU-accelerated hardware. During training, checkpoints of the model are generated at regular intervals. These are different “states” of the generative model: at the end of training, you can evaluate the checkpoints and choose the version of the model that you consider best.
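Choosing among checkpoints usually comes down to comparing an evaluation metric across them. The sketch below picks the checkpoint with the lowest validation loss; the checkpoint names and loss values are hypothetical, and in practice you might prefer a benchmark score or a manual review of sample outputs.

```python
# Hypothetical evaluation results: checkpoint name -> validation loss.
eval_loss = {
    "checkpoint_ep1": 1.42,
    "checkpoint_ep2": 1.18,
    "checkpoint_ep3": 1.25,
}

def best_checkpoint(results):
    """Return the checkpoint name with the lowest validation loss."""
    return min(results, key=results.get)

best = best_checkpoint(eval_loss)
print(best)  # checkpoint_ep2
```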

Opening image credit: iStock.com/Shutthiphong Chandaeng