One of the most popular and widely used generative artificial intelligence models capable of producing photorealistic images is Stable Diffusion. It differs from other image generation models because it significantly reduces processing requirements: it can run on a desktop PC with an NVIDIA GPU equipped with 8 GB of video memory (VRAM).

To use Stable Diffusion, you must provide a prompt, that is, a description of the image, scene or object you wish to obtain. The quality of the generated image depends, in turn, on the quality of the prompt supplied as input.

The source code and the model weights have been released publicly; in addition, the CreativeML OpenRAIL-M license allows you to use, modify and redistribute the modified software. A developer who creates derivative software based on Stable Diffusion must share it under the same license and include a copy of the original Stable Diffusion license.

A huge set of reference images was used during the training phase of Stable Diffusion. Noise is added to each image over a series of successive steps, and the model learns a reverse diffusion process that removes the noise and recreates the image.
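
The noising side of this process can be illustrated with a small sketch. It assumes the closed-form noising rule and the linear noise schedule commonly described for diffusion models; the schedule values, the function names and the four-value toy "image" are illustrative assumptions and are not taken from Stable Diffusion itself, which works on latent representations rather than raw pixels.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Return per-step noise variances rising linearly (assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """Return alpha_bar_t, the running product of (1 - beta_s) for s <= t."""
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [signal * p + sigma * e for p, e in zip(pixels, noise)]
    return noisy, noise


rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

image = [0.5, -0.25, 1.0, 0.0]  # toy "image": four values in [-1, 1]
slightly_noisy, _ = add_noise(image, 10, alpha_bars, rng)
almost_pure_noise, _ = add_noise(image, 999, alpha_bars, rng)
print(slightly_noisy)
print(almost_pure_noise)
```

At an early step the values stay close to the original image, while at the last step almost nothing of the original signal survives. During training, the model is asked to predict the noise that was added, which is what later allows it to run the process in reverse.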

In generative models, the “latent space” is a lower-dimensional representation of the actual image feature space: a way of encoding abstract concepts or relevant features in a more compact format. Thanks to this reduced latent space, Stable Diffusion is able to combine excellent results with modest hardware requirements.
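
To give an idea of the saving, the following sketch compares the number of values in an image with the number of values in its latent representation. The downsampling factor of 8 and the 4 latent channels are the figures commonly reported for the Stable Diffusion 1.x autoencoder; they are assumptions here, since the article does not state them, and the function names are purely illustrative.

```python
def element_count(height, width, channels):
    """Number of scalar values in a height x width x channels tensor."""
    return height * width * channels


def latent_shape(height, width, downsample=8, latent_channels=4):
    """Latent tensor shape for an image (assumed factor 8, 4 channels)."""
    return height // downsample, width // downsample, latent_channels


pixel_values = element_count(512, 512, 3)            # RGB image
lat_h, lat_w, lat_c = latent_shape(512, 512)
latent_values = element_count(lat_h, lat_w, lat_c)   # latent tensor
ratio = pixel_values / latent_values

print(f"pixel space:  {pixel_values} values")
print(f"latent space: {latent_values} values ({lat_h}x{lat_w}x{lat_c})")
print(f"reduction:    {ratio:.0f}x")
```

Under these assumptions, a 512x512 RGB image (786,432 values) corresponds to a 64x64x4 latent (16,384 values), that is, 48 times fewer values for the diffusion process to handle.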

Stable Diffusion WebUI Forge: how to turbocharge Stable Diffusion

Today there are many interfaces for Stable Diffusion, created by independent developers all over the world. Among the most promising, and one we invite you to try, is Stable Diffusion WebUI Forge.

It is an innovative interface, created by the author of ControlNet, that lets you generate and process images much faster than more traditional approaches while still relying on the Stable Diffusion model.

For Windows users with a system based on an NVIDIA GPU with at least 8 GB of VRAM, the advice is to visit this page on GitHub and then click the link “Click Here to Download One-Click Package”.

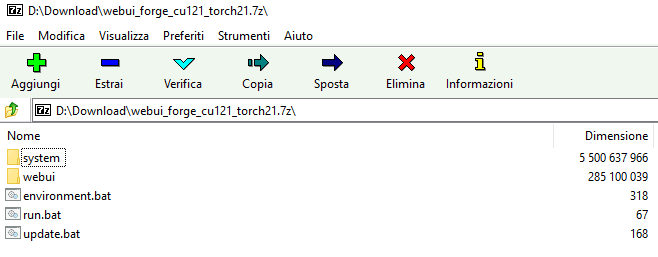

When the download finishes, which can take some time (the compressed archive weighs about 1.7 GB), you get a file in .7z format. Its contents must be extracted to a local folder, for example c:\sd. We suggest using the 7-Zip utility for this operation because, at the time of writing, the performance Windows 11 offers when handling .7z files is clearly inferior.

The next step is to double-click the update.bat file to update Stable Diffusion WebUI Forge to the latest version completely automatically.

Launch the interface and use it from your web browser

Whenever you want to start Stable Diffusion WebUI Forge, double-click the file c:\sd\run.bat. On the first launch only, there is an extra step in which some additional software components are downloaded and installed.

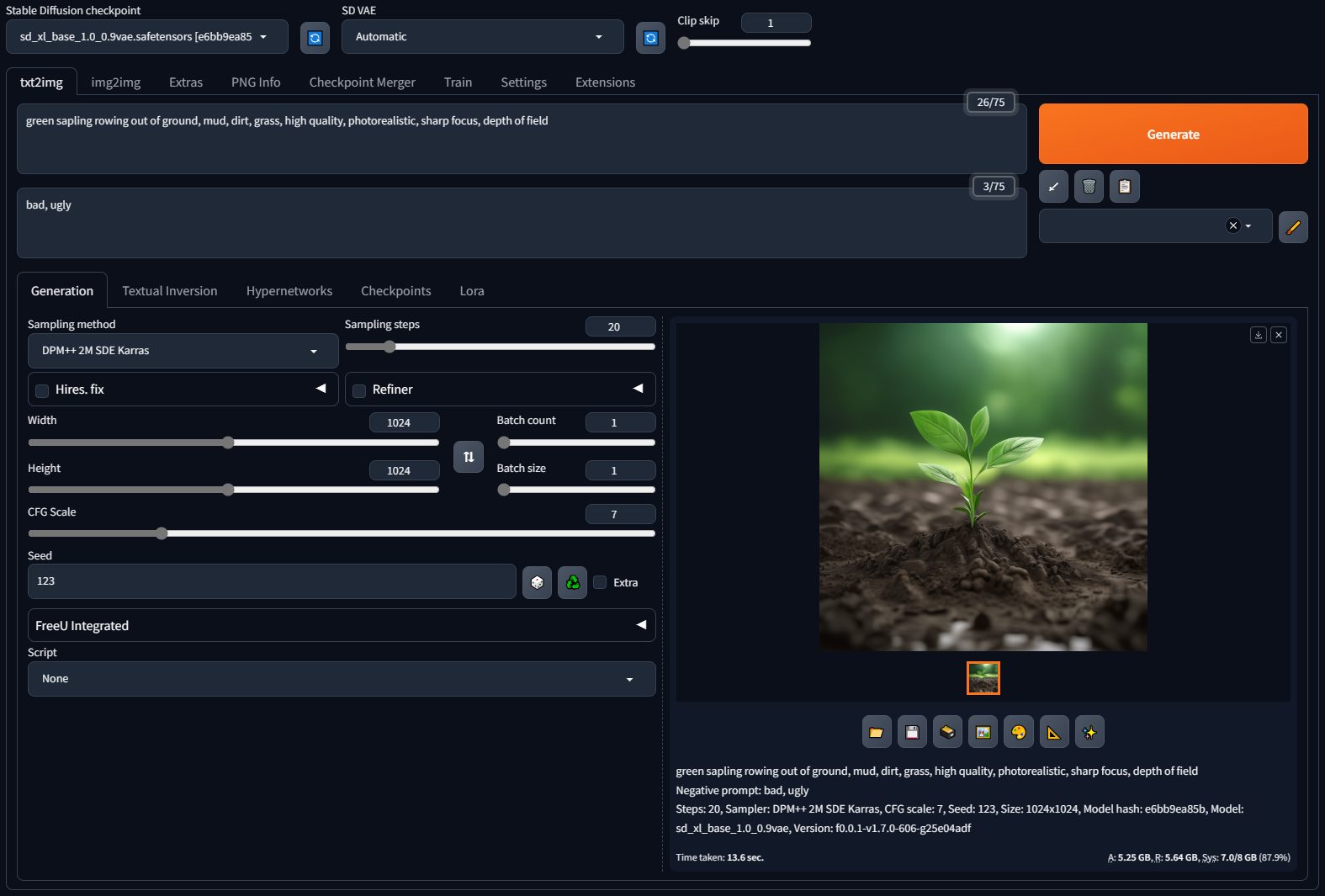

The Stable Diffusion WebUI Forge package actually installs a local web server that responds at the localhost address, on the indicated port. The page that opens in the system's default web browser lets you configure in detail the type of image you want to obtain.
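
If the page does not open, a quick way to verify that the local server is actually listening is to attempt a TCP connection to it. The sketch below is only an illustration: port 7860 is an assumption (it is the usual default for Gradio-based interfaces), so replace it with the port shown in the console window, and the function name is hypothetical.

```python
import socket


def is_server_listening(host="127.0.0.1", port=7860, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


# Port 7860 is an assumed default; use the port printed at startup.
if is_server_listening():
    print("The web interface appears to be running on port 7860.")
else:
    print("Nothing is listening on port 7860.")
```

A negative result usually just means that run.bat has not finished starting, or that the server is using a different port.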

To carry out a first test, just click the txt2img tab (which stands for “text to image”) and enter a description in the Prompt box, trying to be as clear and precise as possible about what you want to generate. The Generate button requests the generation of the image from the input provided.
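
The same text-to-image request can, in principle, be sent from a script instead of the browser. The following is a minimal sketch under several assumptions not covered by the article: that the interface exposes the HTTP API of the AUTOMATIC1111 web UI on which Forge is based (endpoint /sdapi/v1/txt2img), that the server was started with the API enabled (typically through the --api launch option), and that it listens on port 7860. The helper names and the example prompt are hypothetical.

```python
import base64
import json
import urllib.request


def build_txt2img_payload(prompt, negative_prompt="", steps=20,
                          width=512, height=512, seed=-1):
    """Build the JSON body for a text-to-image request."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "steps": steps,
        "width": width,
        "height": height,
        "seed": seed,  # -1 asks the server to pick a random seed
    }


def request_image(payload, base_url="http://127.0.0.1:7860"):
    """POST the payload and return the first image as raw bytes.

    Assumes the API is enabled and reachable at base_url.
    """
    request = urllib.request.Request(
        base_url + "/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=600) as response:
        body = json.load(response)
    # Images are assumed to be returned as base64-encoded strings.
    return base64.b64decode(body["images"][0])


payload = build_txt2img_payload(
    "a red bicycle leaning against a brick wall, golden hour, 50mm photo"
)
print(json.dumps(payload, indent=2))
# With the server running and the API enabled:
# open("output.png", "wb").write(request_image(payload))
```

The prompt in the example follows the advice above: it names the subject, the setting and the photographic style explicitly rather than leaving them to chance.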

Compared with the original implementation, Stable Diffusion WebUI Forge can reduce generation times by up to 75%, depending on the configuration in use.
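
To put the percentage in perspective, a reduction in generation time can be converted into a speedup factor with a couple of lines of arithmetic. The 20-second baseline below is a hypothetical figure chosen only for illustration, not a measurement.

```python
def time_after_reduction(baseline_seconds, reduction_percent):
    """Generation time once it has been cut by reduction_percent."""
    if not 0 <= reduction_percent < 100:
        raise ValueError("reduction_percent must be in [0, 100)")
    return baseline_seconds * (1 - reduction_percent / 100)


def speedup_factor(reduction_percent):
    """How many times faster generation becomes."""
    if not 0 <= reduction_percent < 100:
        raise ValueError("reduction_percent must be in [0, 100)")
    return 1 / (1 - reduction_percent / 100)


baseline = 20.0  # hypothetical seconds per image with the original UI
print(time_after_reduction(baseline, 75))  # 5.0
print(speedup_factor(75))                  # 4.0
```

In the best case quoted, then, an image that used to take 20 seconds would take 5, which is a fourfold speedup.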

Adding more models is very simple

Using other models in combination with Stable Diffusion can be very useful. Stable Diffusion alone may not be sufficient for certain tasks or for obtaining complex images.

A project like Stable Diffusion WebUI Forge lets you extend image generation capabilities and allows greater flexibility in output. For example, using specialized text-to-image models in combination with Stable Diffusion allows you to generate photorealistic images from textual descriptions, enormously expanding the creative possibilities.

SDXL, also published by Stability AI, can for example be downloaded from this page and then saved in the folder c:\sd\webui\models\Stable-diffusion, right where there is a text file named Put Stable Diffusion checkpoints here.txt.
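
To confirm that a downloaded model ended up in the right place, you can list the checkpoint files found in that folder. This is a small sketch that assumes checkpoints use the .safetensors or .ckpt extension, which are the common formats but are not mentioned in the article; the function name is hypothetical.

```python
from pathlib import Path

# Assumed checkpoint file extensions.
CHECKPOINT_SUFFIXES = {".safetensors", ".ckpt"}


def list_checkpoints(models_dir):
    """Return the sorted names of checkpoint files in models_dir."""
    folder = Path(models_dir)
    if not folder.is_dir():
        return []
    return sorted(
        entry.name
        for entry in folder.iterdir()
        if entry.is_file() and entry.suffix.lower() in CHECKPOINT_SUFFIXES
    )


# Folder taken from the article; adjust it if you extracted elsewhere.
for name in list_checkpoints(r"c:\sd\webui\models\Stable-diffusion"):
    print(name)
```

The placeholder text file is ignored because of its extension, so only files that look like actual model checkpoints are printed.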

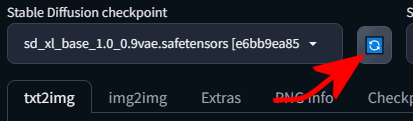

After restarting Stable Diffusion WebUI Forge, click the Stable Diffusion checkpoint drop-down menu to choose the additional model (in this case SDXL 1.0), then click the button on the right to request that it actually be loaded.

Something that was impossible with the original implementation becomes possible with Stable Diffusion WebUI Forge: you can additionally use ControlNet, a neural network structure designed to control diffusion models by adding extra conditions.

ControlNet connects with Stable Diffusion by providing a mechanism to influence and control the image generation process. By integrating ControlNet with Stable Diffusion, users can introduce additional or specific conditions to drive image generation in a more precise and customized way. Take a look at the practical examples available in these paragraphs.

Opening image credit: iStock.com – BlackJack3D