In computer graphics, synthesizing dynamic three-dimensional scenes in real time at 4K resolution is an ambitious and complex goal. Some recent methods have achieved truly impressive rendering quality, but one of their main obstacles is limited rendering speed at high resolutions. To overcome this challenge, a team of researchers has developed a solution called 4K4D: let’s explain what it is.

By real-time synthesis of three-dimensional images we mean the process of generating and displaying 3D images from a stream that is itself captured in real time. Think of a “live” video or the playback of multimedia content.

4K4D: what it is and how the technology for producing dynamic views of 3D scenes in 4K works

The main goal of 4K4D is high-fidelity, real-time synthesis of dynamic views of 3D scenes at 4K resolution. At the heart of 4K4D is a new representation called a “4D point cloud”, built on a 4D feature grid that provides an organized structure and makes the points easier to optimize.

The “points” the researchers refer to are used to position and render a surface or object in three-dimensional space. In 4K4D, these points make up the representation of the three-dimensional scene. Each point has properties such as position, radius and density, which are used to model the geometry of the scene.
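To make the idea of “points plus a 4D feature grid” more concrete, here is a minimal sketch, assuming a dense grid indexed by (x, y, z, t) that stores one scalar feature per vertex, and a hypothetical `Point4D` record carrying the properties named above. The names `Point4D`, `sample_grid` and `point_feature` are illustrative only; the actual 4K4D grid layout, feature sizes and the way features are turned into radius and density may differ.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Sequence, Tuple

# Hypothetical dense 4D feature grid indexed as values[x][y][z][t], one scalar
# feature per vertex; every axis must have at least 2 vertices.
Grid4D = List[List[List[List[float]]]]


@dataclass
class Point4D:
    """One point of a dynamic point cloud (fields follow the article; units assumed)."""
    position: Tuple[float, float, float]  # (x, y, z), assumed already in grid-index units
    time: float                           # frame time, assumed in grid-index units
    radius: float
    density: float


def sample_grid(values: Grid4D, coord: Sequence[float]) -> float:
    """Quadrilinear interpolation of the grid at a continuous (x, y, z, t)."""
    sizes = (len(values), len(values[0]), len(values[0][0]), len(values[0][0][0]))
    base, frac = [], []
    for c, n in zip(coord, sizes):
        c = min(max(c, 0.0), n - 1.0)  # clamp to the grid extent
        i = min(int(c), n - 2)          # lower corner of the enclosing cell
        base.append(i)
        frac.append(c - i)
    result = 0.0
    # Weighted sum over the 16 corners of the enclosing 4D cell.
    for offsets in product((0, 1), repeat=4):
        weight = 1.0
        for o, f in zip(offsets, frac):
            weight *= f if o else 1.0 - f
        x, y, z, t = (b + o for b, o in zip(base, offsets))
        result += weight * values[x][y][z][t]
    return result


def point_feature(point: Point4D, values: Grid4D) -> float:
    """Look up the grid feature at the point's (x, y, z, t) coordinate."""
    return sample_grid(values, (*point.position, point.time))
```

Because neighbouring points read from shared grid vertices, their features vary smoothly in space and time, which is one plausible reading of why an organized grid makes the points easier to optimize.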

The project’s official website briefly illustrates its aims and the results achieved, and the full research paper is available there for a more in-depth study.

Why are we talking about 4D if the technology offers computerized synthesis in three dimensions?

In the name 4K4D, “4D” refers to the fourth dimension, time, which is added to the three-dimensional representation of a scene.

The spatial coordinates (length, width, height) define the three-dimensional structure of an object or scene. Adding the temporal dimension (the fourth dimension) makes it possible to capture and represent movement and changes in the scene over time. In 4K4D, the 4D representation is used to synthesize dynamic views of 3D scenes in real time, capturing not only the three-dimensional structure of the scene but also how it evolves over time.

How 4K4D technology works

To synthesize a high-quality scene, 4K4D begins by applying the “space carving” algorithm, which extracts an initial sequence of point clouds from the scene being processed. This point cloud sequence then serves as the basis for representing the geometry and the appearance of the scene.
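The core idea of space carving can be shown with a toy example: start from a full block of voxels and discard every voxel whose projection falls outside the object’s silhouette in any camera view. The sketch below assumes three axis-aligned orthographic cameras and binary silhouettes given as sets of pixels; real multi-view setups, including the one used for 4K4D, rely on calibrated perspective cameras, so `space_carve` and `voxels_to_points` are hypothetical helpers for illustration only.

```python
from itertools import product
from typing import List, Set, Tuple

Pixel = Tuple[int, int]
Voxel = Tuple[int, int, int]


def space_carve(n: int, front: Set[Pixel], side: Set[Pixel], top: Set[Pixel]) -> List[Voxel]:
    """Keep the voxels of an n x n x n grid whose projection lies inside every silhouette.

    Assumes three axis-aligned orthographic cameras: the front view sees (x, y),
    the side view sees (z, y) and the top view sees (x, z).
    """
    kept = []
    for x, y, z in product(range(n), repeat=3):
        # A voxel survives only if no view carves it away.
        if (x, y) in front and (z, y) in side and (x, z) in top:
            kept.append((x, y, z))
    return kept


def voxels_to_points(voxels: List[Voxel], voxel_size: float) -> List[Tuple[float, float, float]]:
    """Turn surviving voxels into an initial point cloud (one point per voxel centre)."""
    return [((x + 0.5) * voxel_size, (y + 0.5) * voxel_size, (z + 0.5) * voxel_size)
            for x, y, z in voxels]
```

Running the carving once per video frame, with that frame’s silhouettes, would yield a sequence of point clouds, which is the kind of initialization the paragraph above describes.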

To learn the 4K4D representation from multi-view RGB videos, the researchers used a differentiable “depth peeling” algorithm (explained below). This approach allows scene details to be learned effectively, enabling 4K4D to adapt dynamically to changes in viewpoint and lighting conditions.

Depth peeling is a technique used to extract multiple layers of information from a scene: if there are overlapping transparent objects, depth peeling separates them into distinct layers according to their depth. Differentiability is the property of a function whose derivatives with respect to its independent variables can be computed. In this context, making the depth peeling algorithm differentiable means that the derivatives of the rendered result can be computed with respect to the model parameters or the characteristics of the scene.
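Both ideas can be sketched for a single pixel. The code below assumes each pixel receives a list of fragments `(depth, alpha, color)` with a single color channel; `depth_peel` extracts the layers nearest-first, one pass per layer, and `composite` blends them with standard front-to-back alpha compositing. The compositing rule and all names here are assumptions for illustration, not the exact 4K4D formulation. `composite_grad_alpha` shows what “differentiable” buys: the analytic derivative dC/dα_k = T_k (c_k − R_k), where T_k is the transmittance in front of layer k and R_k is the composite of the layers behind it.

```python
from typing import List, Tuple

Fragment = Tuple[float, float, float]  # (depth, alpha, color) for one pixel


def depth_peel(fragments: List[Fragment], num_layers: int) -> List[Fragment]:
    """Extract up to num_layers layers, nearest first, one 'pass' per layer.

    Each pass keeps the nearest fragment strictly behind the previously peeled
    depth, mimicking how GPU depth peeling reuses the last depth buffer as a
    second depth test. Fragments at exactly the same depth are not separated.
    """
    layers: List[Fragment] = []
    last_depth = float("-inf")
    for _ in range(num_layers):
        behind = [f for f in fragments if f[0] > last_depth]
        if not behind:
            break
        nearest = min(behind, key=lambda f: f[0])
        layers.append(nearest)
        last_depth = nearest[0]
    return layers


def composite(layers: List[Fragment]) -> float:
    """Front-to-back alpha compositing: C = sum_i T_i * alpha_i * c_i."""
    color, transmittance = 0.0, 1.0
    for _, alpha, c in layers:
        color += transmittance * alpha * c
        transmittance *= 1.0 - alpha
    return color


def composite_grad_alpha(layers: List[Fragment]) -> List[float]:
    """Analytic dC/d(alpha_k) = T_k * (c_k - R_k) for every layer k."""
    grads = []
    transmittance = 1.0
    for k, (_, alpha, c) in enumerate(layers):
        behind = composite(layers[k + 1:])  # R_k: what layer k partially hides
        grads.append(transmittance * (c - behind))
        transmittance *= 1.0 - alpha
    return grads
```

A positive derivative for a layer means that making it more opaque brightens the pixel; these per-layer derivatives are what a training loop can propagate back to the model parameters.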

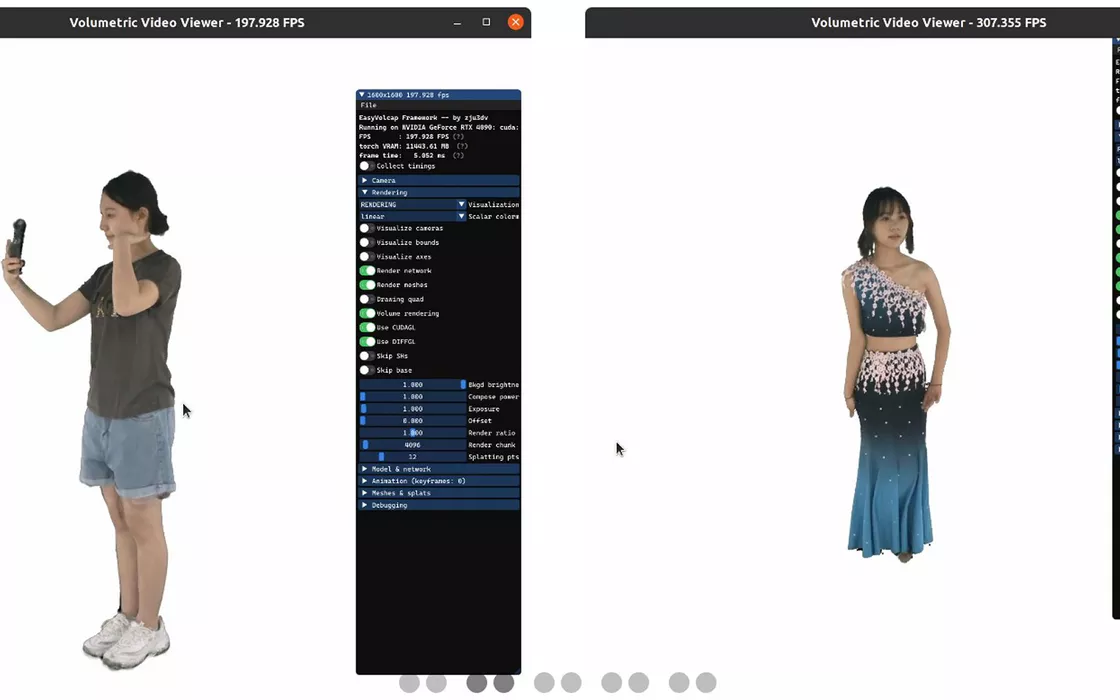

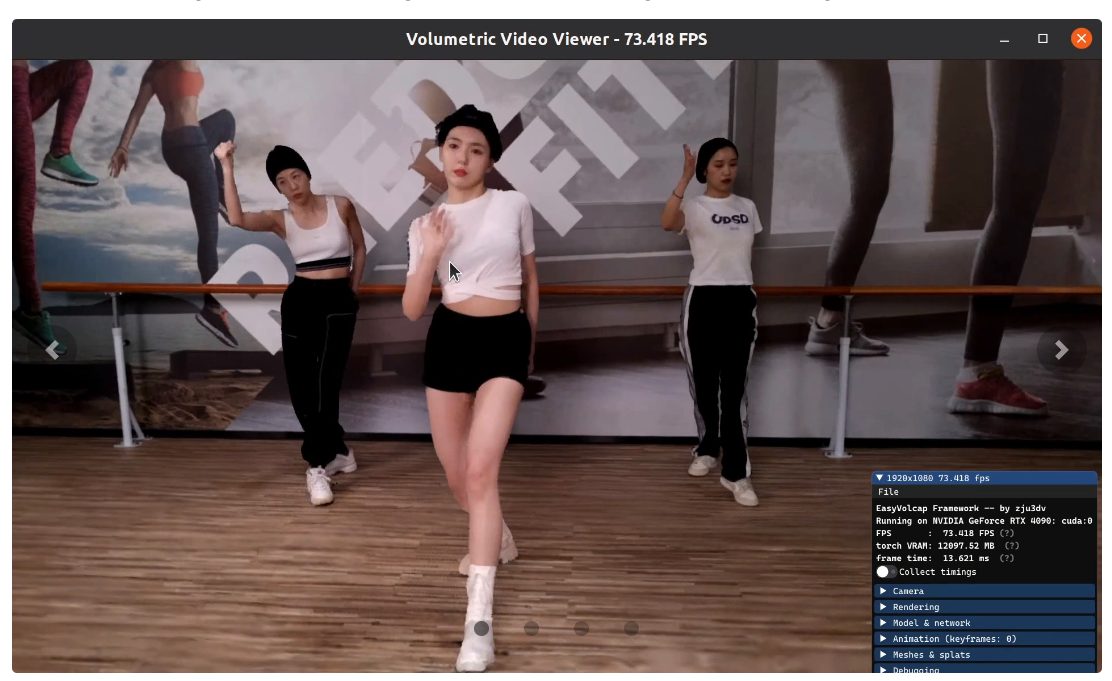

Unmatched performance: up to 80 fps on a 4K dataset, over 400 fps on Full HD 1080p images

Optimization techniques that rely on derivatives (gradient-based methods) are used to train or adjust the parameters of a model so that it better fits the training data. When training a model, a loss function is defined that measures the discrepancy between the model’s predictions and the desired values. The derivatives of the loss function with respect to each model parameter indicate how much the loss changes when that parameter changes, and therefore how to update the parameters, iteratively during training, so as to reduce the loss.
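A minimal gradient-descent sketch makes this loop concrete. It assumes a hypothetical one-pixel “renderer” in which a front layer with opacity `alpha` covers an opaque background, and a squared-error loss against an observed pixel value; the derivative comes from the chain rule. The learning rate and step count are illustrative choices, not values from 4K4D.

```python
def render(alpha: float, c_front: float, c_back: float) -> float:
    """Hypothetical one-pixel renderer: a front layer over an opaque background."""
    return alpha * c_front + (1.0 - alpha) * c_back


def loss_and_grad(alpha: float, c_front: float, c_back: float, target: float):
    """Squared-error loss and its derivative with respect to alpha."""
    residual = render(alpha, c_front, c_back) - target
    loss = residual ** 2
    # Chain rule: dL/dalpha = 2 * residual * dC/dalpha, with dC/dalpha = c_front - c_back.
    grad = 2.0 * residual * (c_front - c_back)
    return loss, grad


def fit_alpha(c_front: float, c_back: float, target: float,
              alpha: float = 0.1, lr: float = 0.5, steps: int = 200) -> float:
    """Plain gradient descent: step against the derivative to reduce the loss."""
    for _ in range(steps):
        _, grad = loss_and_grad(alpha, c_front, c_back, target)
        alpha -= lr * grad
    return alpha
```

For example, `fit_alpha(1.0, 0.2, 0.6)` converges to an opacity of 0.5, the value at which the rendered pixel matches the target. A real model has millions of parameters instead of one, but each is updated the same way.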

The result is impressive: experiments conducted so far show that the 4K4D representation can be rendered at over 400 frames per second (fps) at 1080p resolution on one dataset, and at 80 fps at 4K resolution on another, using an RTX 4090 GPU. This is a significant leap over previous methods: rendering is about 30 times faster, with an overall quality that has few equals.
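Some simple arithmetic helps put these frame rates in perspective: the time available to render each frame, and how many pixels are produced per second. The sketch assumes that 1080p means 1920×1080 and that 4K means UHD 3840×2160; the exact image sizes of the datasets used in the experiments may differ.

```python
# Assumed resolutions (width, height); the datasets' exact sizes may differ.
RESOLUTIONS = {"1080p": (1920, 1080), "4K": (3840, 2160)}


def frame_budget_ms(fps: int) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(resolution: str, fps: int) -> int:
    """Number of pixels produced per second at the given resolution and frame rate."""
    width, height = RESOLUTIONS[resolution]
    return width * height * fps


for name, fps in (("1080p", 400), ("4K", 80)):
    print(f"{name} @ {fps} fps: {frame_budget_ms(fps)} ms/frame, "
          f"{pixels_per_second(name, fps):,} pixels/s")
```

At 400 fps each 1080p frame must be ready in 2.5 ms, and at 80 fps each 4K frame in 12.5 ms; in both cases the renderer produces several hundred million pixels per second (about 829 million and 664 million respectively).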

The team behind 4K4D has promised to release soon, on the project’s official GitHub repository, all the source code needed to reproduce the results, making it possible to integrate the technology into your own applications. The aim is to share 4K4D so that anyone can benefit from it and use it to develop new, innovative projects.

The images in the article are taken from the material published on the official website of the 4K4D project.