The term artificial intelligence is now on everyone’s lips, and on the pages of how2do.org we have tried to follow the main developments closely. We talked, for example, about Ollama, an open project that acts as a collector and manager of open source generative models, allowing them to be used from the terminal window. For those who are unfamiliar with the command line, we have more recently covered some graphical interface projects for LLMs (Large Language Models).

Jan is a tool created with the aim of democratizing AI: the software comes in the form of an application with a graphical interface, compatible with Windows, macOS and Linux systems. The application sweeps away the technical complexity associated with implementing AI solutions and transforms your computer into a system ready to run leading AI models.

Rethink your computer and turn it into an AI-powered machine with Jan

If you are looking for a tool capable of making the most of the main open source generative models (and, optionally, of communicating with commercial products such as OpenAI GPT 3.5 and GPT 4), you need not look elsewhere: Jan, downloadable from here, is suitable for everyone and is ready to use right after installation, with a simple click.

Offered as a standalone application that is easy to use and pleasant to look at, Jan can be used without limitations and without paying a cent. Jan has many advantages but, to begin with, we want to highlight three of them:

- The program automatically takes advantage of GPU acceleration (especially if there is a dedicated card) and is compatible with NVIDIA, AMD, Intel and Apple solutions. It can also work in “CPU only” mode.

- Drawing on the Hub, just a click away, you can choose which LLM or LLMs to use. Open source models are automatically saved in the Jan folder (subfolder models). In Windows, it is usually found in the path %userprofile%\jan\models.

- Jan enables the use of a sovereign artificial intelligence, in the sense that the application works 100% locally, without the need for an Internet connection (only necessary to download the various models of interest). With local models, the data always remains on the user’s computer without ever being exchanged with any remote server.
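
To check which models have already been saved, you can look inside the models subfolder mentioned above. The following is a minimal Python sketch, not part of Jan itself: it assumes the Jan folder sits under the user's home directory (as the Windows path suggests) and that each downloaded model lives in its own subfolder. Both points are assumptions that may differ on your installation, and the function names are made up for illustration.

```python
from pathlib import Path


def default_models_dir():
    """Guess the models folder: <home>/jan/models (assumption based on the Windows path)."""
    return Path.home() / "jan" / "models"


def list_downloaded_models(models_dir):
    """Return the sorted names of the subfolders found in models_dir.

    Assumption: each downloaded model is stored in its own subfolder.
    Returns an empty list if the folder does not exist.
    """
    models_dir = Path(models_dir)
    if not models_dir.is_dir():
        return []
    return sorted(p.name for p in models_dir.iterdir() if p.is_dir())
```

Calling `list_downloaded_models(default_models_dir())` returns an empty list when nothing has been downloaded yet or when the folder is located elsewhere.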

How to use Jan

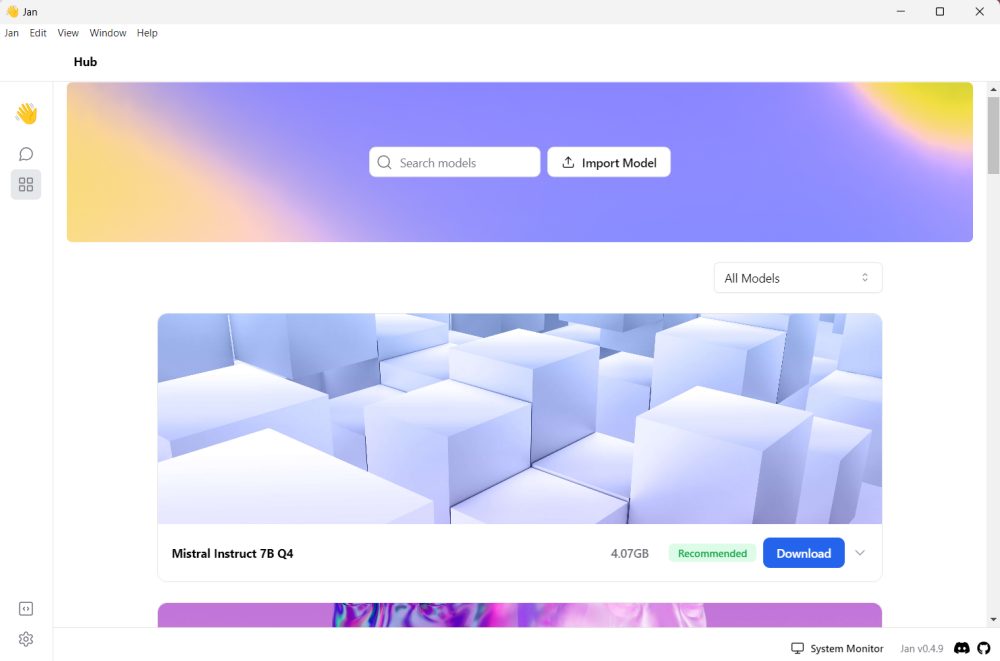

The first step after installing Jan is to click on the Hub icon, located in the left column. Here you can scroll through the long list of LLMs directly supported by the application.

Jan checks the compatibility of each model with the system in use and, for example, does not recommend installation when the amount of RAM or free space is not sufficient.

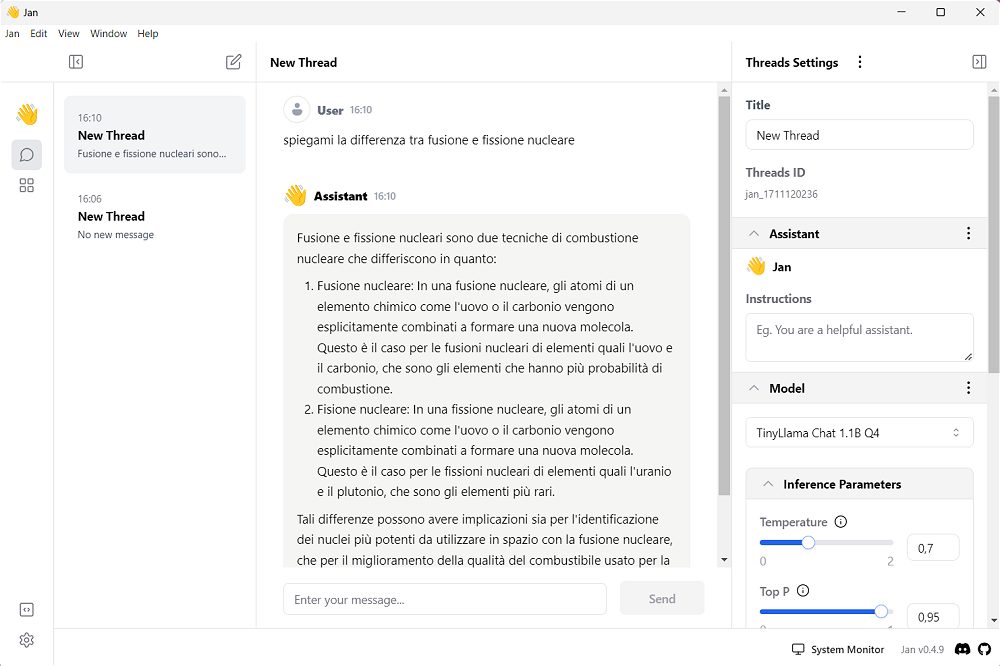

The Download button lets you download the model and store it locally, in the Jan folder. Once this operation is completed, you can click on the Thread icon to start talking with the LLM. The Title field, at the top right, can be used to assign an identifying label to the conversation. Furthermore, the Instructions box lets you give very precise instructions: “rules” that the model must follow when providing answers and, in general, when interacting with the user.

A little further down, you will find all the inference parameters: these are the variables and factors that influence the model’s inference process, i.e. the deduction of new information or the proposition of conclusions based on information already known or on available data.
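
To give an idea of what an inference parameter does, consider temperature, a setting commonly exposed by LLM tools (we are not listing Jan's exact parameters here). The sketch below is a generic illustration in Python, not Jan's code: it shows how dividing the model's raw scores by the temperature before converting them into probabilities makes the choice of the next token more or less "sharp". The scores used are invented.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, scaled by a temperature.

    Low temperatures concentrate probability on the best-scoring token;
    high temperatures flatten the distribution.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Invented scores for three candidate tokens
example_logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(example_logits, t)
    print(t, [round(p, 3) for p in probs])
```

With a low temperature, almost all of the probability goes to the first token, so answers become more predictable; with a high one, the alternatives have a real chance of being picked.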

Users can also control the levels of censorship and use Jan in a variety of settings, from the conference room to any room in the home. You can also extend Jan’s capabilities by using other models available on HuggingFace or by loading customized models.

Artificial intelligence that works in private and offline mode

We said that all LLMs supported by Jan work locally (unless you specify, for example, keys for using OpenAI GPT models), guaranteeing maximum privacy. Conversations are therefore private, and data is saved at file system level in a transparent and open data format.

Jan is also open source, allowing users to examine every line of the source code to ensure the security and privacy of their data.

Perfect for developers, thanks to OpenAI-style APIs

Jan is definitely aimed at developers, giving them the ability to easily integrate a locally running LLM into their projects.

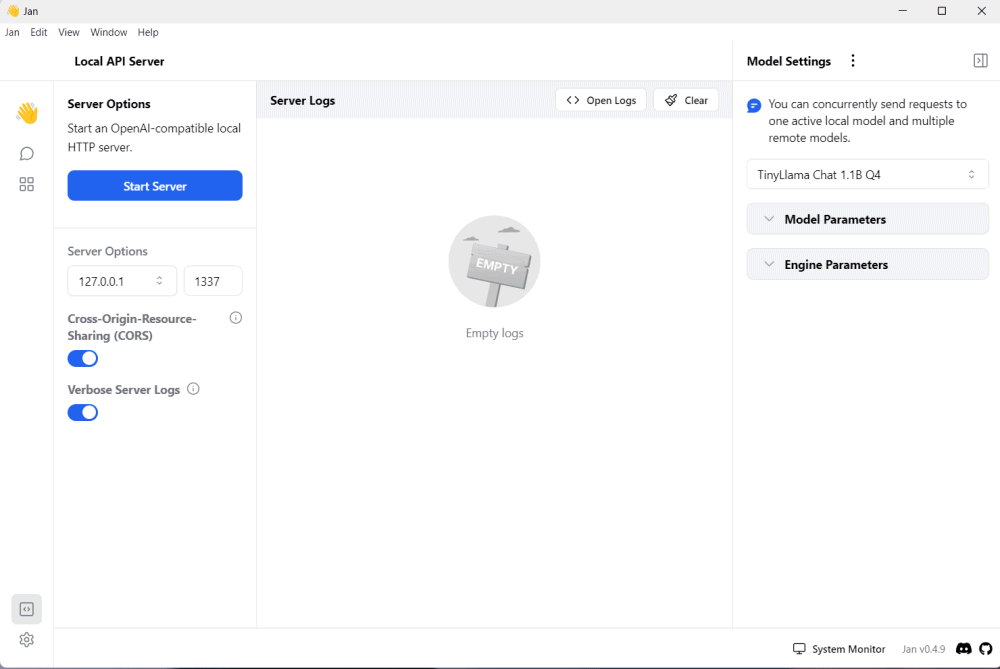

You can activate the local server mode to start building concrete projects using an API (Application Programming Interface) that follows the same approach used by OpenAI, with the difference that in Jan’s case it is not necessary to pay anyone a penny for using the model.
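
As an illustration, this is roughly what a request to the local server could look like from Python, using only the standard library. It is a sketch under several assumptions: that the server listens on localhost port 1337, that it exposes an OpenAI-style `/v1/chat/completions` endpoint, and that the response has the usual OpenAI shape. The model name `my-local-model` is a placeholder to be replaced with a model you have actually downloaded.

```python
import json
import urllib.request

# Assumption: default port 1337 and an OpenAI-style path
BASE_URL = "http://localhost:1337/v1"


def build_chat_request(prompt, model="my-local-model", base_url=BASE_URL):
    """Prepare an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,  # placeholder name, replace with a downloaded model
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="my-local-model", timeout=60):
    """Send the prompt to the local server and return the text of the reply.

    Assumes the response follows the OpenAI chat completion format.
    """
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the local server running, `print(ask("Say hello in one sentence."))` should print the model's answer; if the server is not running, `urlopen` raises a connection error.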

In particular, Jan Desktop includes a developer console and an intuitive user interface that make it easy to view logs, configure models, and more.

To get started, you can click on the Local API server icon shown at the bottom left of Jan’s interface. As can be seen in the figure, Jan indicates the port on which it listens (by default it is TCP 1337, but it is possible to customize it). Once you click on Start Server, however, the program will stop responding to user prompts entered via the graphical interface and will proceed only via API.
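
Before sending requests from your own code, it can be handy to verify that something is actually listening on the chosen port. The following Python sketch is a generic TCP check, not a feature of Jan: it simply tries to open a connection to the default port 1337 on the local machine. The function name is made up for illustration, and the port should be changed if you customized it.

```python
import socket


def is_server_listening(host="127.0.0.1", port=1337, timeout=1.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable
        return False
```

A `True` result only says that some program accepts connections on that port; it does not prove that the program is Jan.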

For those who like to experiment, Jan offers the possibility to customize the application’s appearance and features through themes, assistants and models, without the need to write code. The application can be completely customized through the use of extensions, inspired by the concept introduced by Microsoft with Visual Studio Code.

Opening image credit: iStock.com – da-kuk