Only a few days have passed since the presentation of the latest generation Gemini model that Google seems to want to shake up the market with another novelty. Of course, we are still talking about a study carried out by a group of researchers from the Mountain View company with the collaboration of a team of scholars from the universities of Stanford and the Georgia Institute of Technology. However, Google shows that it already has the technology for create videos from a text description.

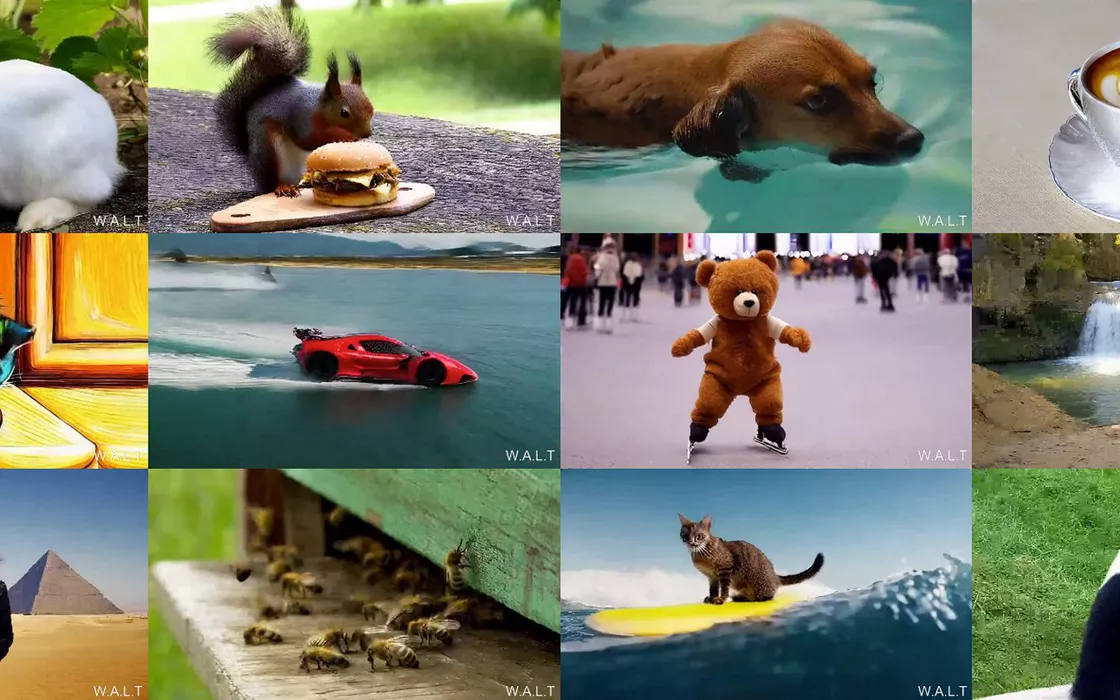

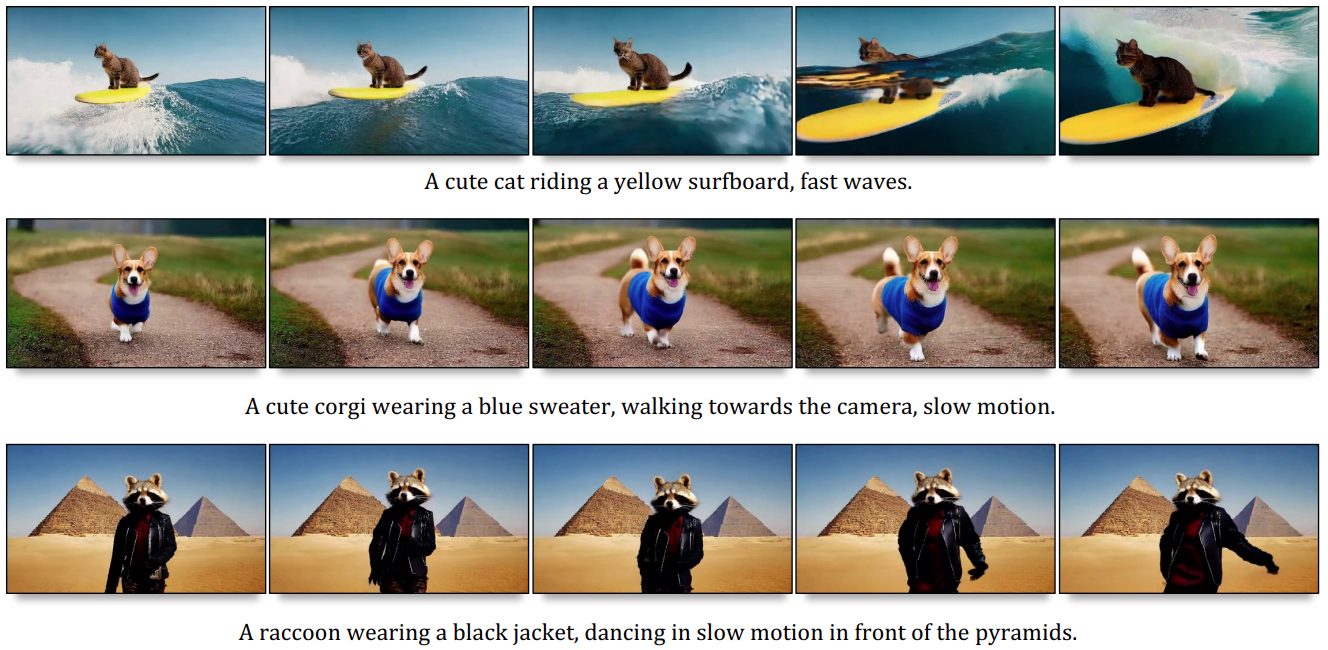

The results are astonishing: look at the examples published on this page. As you can see, the page offers many examples of videos: by moving the mouse pointer over each of them, you can read the request in natural language which led to the generation of the file. By pressing the button F5 or in any case by reloading the same page, many other examples are offered.

WALT is the new system that creates videos from a textual description: Google is at the forefront

There is behind the “magic”. W.A.L.T. (Window Attention Latent Transformer), an innovative system for generating photorealistic videos based on the use of transformers and a diffusion modeling mechanism.

The diffusion modeling is a technique that can be used to generate images or videos by iteratively sampling from a probability distribution. Diffusion can be viewed as the process of generating pixels or elements of an image or video.

The procedure involves a series of repeated steps (iterative approach): at each iteration, new pixels or elements are added or modified, contributing to the progressive formation of the final image or video. The aforementioned probability distribution guides the sampling process, determining which values are most likely or least likely for possible selection. A sampling carried out in this way allows you to introduce randomness and variations, helping to make the generated content more realistic and interesting.

According to Google researchers and other collaborators who participated in the project, WALT guarantees performance first level with various benchmarks related to the generation of videos (UCF-101 and Kinetics-600) and images (ImageNet).

The training of three cascade models for generating videos from text allows you to obtain exciting results. We start from a “Base Latent Video Diffusion” which leverages “latent information” or fundamental characteristics and key aspects of the video to be generated.

The system uses two models in sequence “Video Super-Resolution Diffusion“, specially designed to upscale video images and improve resolution of the sequence produced. In this case up to 512 x 896 pixel. The three models used in cascade enable the so-called “text-to-video generation” by translating the texts into one-by-one video sequences speed of 8 frames per second.