Snowflake helps companies manage and extract value from their data through its Data Cloud. Customers use the Snowflake Data Cloud to run business intelligence on data that was once stored in separate silos and could not be correlated. With Snowflake's solutions, any enterprise can explore and share data securely, power data-driven applications, and run diverse analytics, artificial intelligence (AI) and machine learning workloads. Wherever your data or users are, Snowflake delivers a single data experience that spans multiple clouds and geographies.

What’s new in Snowflake Arctic: a truly open and unrestricted AI model

Today a wide range of Large Language Models (LLMs) is available, covering every need and every size. Snowflake Arctic is the new offering with which the American company aims to stand out from the solutions already on the market by addressing businesses directly.

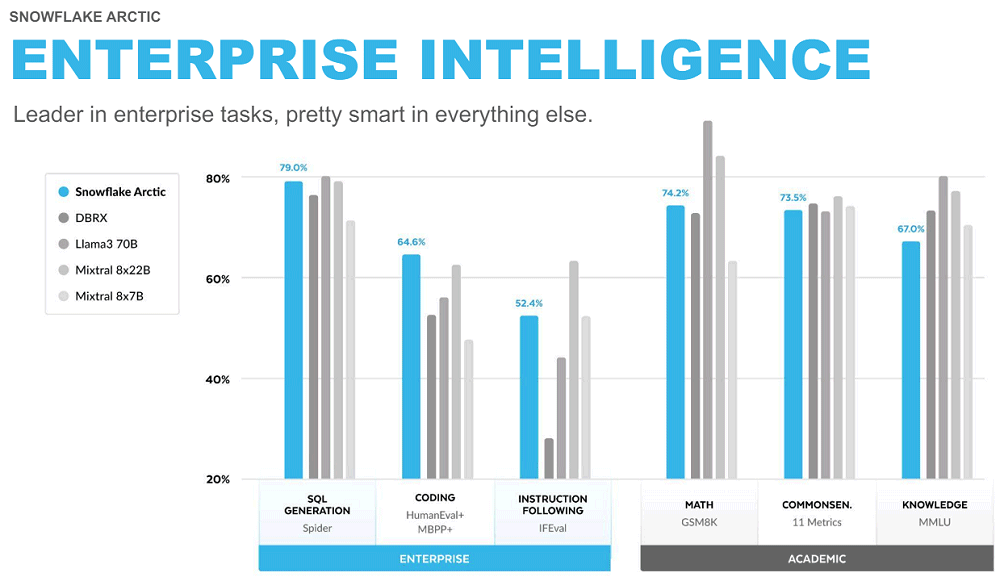

Optimized to handle complex enterprise workloads, Arctic excels, for example, at SQL code generation and instruction following, and stands out at retrieving information from corporate knowledge bases.

All with an open approach: Snowflake stresses that its Arctic model delivers unprecedented intelligence and efficiency. And for the first time for a distinctly enterprise-oriented model, users have access to the weights (as well as the details of the research behind the training phase) under the Apache 2.0 license.

Snowflake Arctic is therefore a truly open and powerful model which, thanks to its license terms, allows personal, research and commercial use without restrictions.

The code templates shared by Snowflake engineers also let users quickly start deploying and customizing Arctic with their preferred frameworks. These include NVIDIA NIM with NVIDIA TensorRT-LLM, vLLM and Hugging Face. For immediate use, Arctic is available for serverless inference in Snowflake Cortex, the fully managed service that offers machine learning and AI solutions in the Data Cloud.

The new model will also be available on Amazon Web Services (AWS), along with other model gardens and catalogs, including Hugging Face, Lamini, Microsoft Azure, NVIDIA API catalog, Perplexity, Together AI and more.

A new paradigm for training and optimizing generative models

Arctic builds on the Mixture of Experts (MoE) pattern: to generate output, it relies on a neural network architecture that combines multiple "expert" models (or subnetworks), each handling a different aspect of a complex task. Each expert specializes in a certain part of the problem, and only a few experts are activated for any given input. Together the experts improve overall performance as well as language comprehension and generation capabilities.
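To make the routing idea concrete, here is a minimal sketch of a top-k gated MoE layer in plain Python. It is not Arctic's implementation: the gate matrix, the toy experts created by `make_expert`, and the choice `top_k=2` are all assumptions made for illustration. A gate scores every expert for the input, only the best-scoring experts run, and their outputs are blended using softmax weights.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical toy expert: multiplies every input value by `scale`."""
    def expert(x):
        return [scale * v for v in x]
    return expert


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route `x` to the `top_k` best-scoring experts and blend their outputs.

    gate_weights holds one row per expert; the gate score of an expert is
    the dot product of its row with the input vector.
    """
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Indices of the top_k experts, best score first.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Softmax only over the chosen experts, so their weights sum to 1.
    weights = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for weight, index in zip(weights, chosen):
        expert_out = experts[index](x)  # the other experts are never evaluated
        output = [o + weight * v for o, v in zip(output, expert_out)]
    return output, chosen
```

Calling `moe_forward([2.0, 1.0], gate, experts)` with four toy experts evaluates only two of them, which is why an MoE model can hold many parameters in total while using a small share of them per token.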

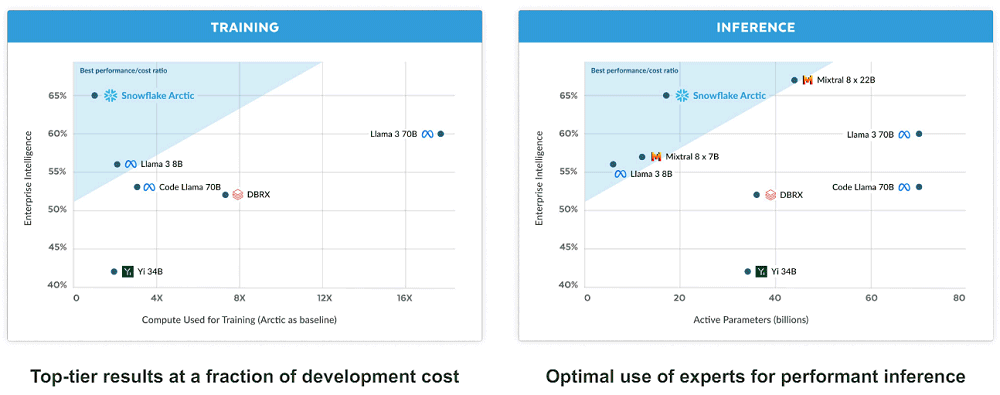

The Snowflake research team, made up of some of the best researchers and systems engineers in the industry, built Arctic in less than three months at roughly one eighth of the training cost of similar models. The American company thereby sets a new benchmark, especially in terms of efficiency and savings.

By activating 17 of its 480 billion parameters at a time, Arctic achieves unprecedented token efficiency. The model activates approximately 50% fewer parameters than DBRX and 75% fewer than Llama 3 70B during inference or training. Furthermore, it outperforms leading open models, including DBRX, Mixtral-8x7B and others, in coding (HumanEval+, MBPP+) and SQL generation (Spider), while providing excellent performance in general language understanding (MMLU).
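The percentages above can be checked with a few lines of arithmetic. The 17 and 480 billion figures come from the article. The comparison figures are assumptions not stated in it: roughly 36 billion active parameters for DBRX (its publicly reported value) and 70 billion for Llama 3 70B, a dense model in which every parameter is active.

```python
# Figures from the article (billions of parameters).
ARCTIC_ACTIVE_B = 17
ARCTIC_TOTAL_B = 480

# Assumptions, not stated in the article.
DBRX_ACTIVE_B = 36         # publicly reported active parameters for DBRX
LLAMA3_70B_ACTIVE_B = 70   # dense model: all parameters are active


def percent_fewer(ours, theirs):
    """Percentage by which `ours` is smaller than `theirs`."""
    return 100.0 * (1 - ours / theirs)


# Share of Arctic's parameters that are active for a given token.
active_share = 100.0 * ARCTIC_ACTIVE_B / ARCTIC_TOTAL_B

print(f"Active share of Arctic: {active_share:.1f}%")
print(f"Fewer than DBRX: {percent_fewer(ARCTIC_ACTIVE_B, DBRX_ACTIVE_B):.1f}%")
print(f"Fewer than Llama 3 70B: {percent_fewer(ARCTIC_ACTIVE_B, LLAMA3_70B_ACTIVE_B):.1f}%")
```

Under these assumptions Arctic activates about 3.5% of its parameters at a time, and the reductions come out near 53% and 76%, in line with the rounded 50% and 75% quoted above.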

These results matter all the more considering that around 46% of corporate decision makers said they rely on existing open source LLMs to adopt generative AI as an integral part of their organization's strategy (source: Forrester Research).

Extract value from business data with Snowflake

In one of the slides shown during the Arctic presentation, Snowflake managers wrote in large letters: "there is no AI strategy without a data strategy". In other words, an effective AI strategy requires a well-defined strategy for managing and using data first. AI depends on data availability and quality to learn, improve and make relevant decisions. Without quality data and a strategic approach to its collection, cleaning, storage and analysis, any effort to implement AI risks proving inefficient, ineffective or even counterproductive.

Snowflake provides businesses with the tools to build powerful AI and machine learning apps from their own data. Arctic accelerates customers' ability to build AI apps at scale, within the security and governance perimeter of the Data Cloud.

Snowflake's offering aims to be a powerful yet economical way to combine proprietary data (the immense store of information produced or collected by the company over years of activity) with a top-tier LLM. This makes it possible, for example, to unlock a Retrieval Augmented Generation (RAG) or semantic search service tailored to the specific needs of each business.
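The retrieval half of RAG can be sketched in a few lines of Python. This is a toy illustration, not Snowflake's service: `embed` is a hypothetical bag-of-words stand-in for a real embedding model, and the prompt wording in `build_prompt` is an assumption. The point is only the flow: rank company documents by similarity to the question, then hand the best matches to the LLM as context.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding (assumption): lowercase word counts, not a real model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    query = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(query, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Assemble the text that would be sent to an LLM (wording is illustrative)."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

In a real deployment the word-count vectors would be replaced by embeddings from a trained model and the documents would live in a governed data store, but the ranking and prompt assembly steps keep the same shape.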

With access to other LLMs as well through its Data Cloud, Snowflake offers a secure combination of infrastructure and computing power that maximizes the potential of AI in production. The company has expanded its collaboration with NVIDIA and recently announced investments in Landing AI, Mistral AI and Reka to help customers create value from their corporate data with LLMs and AI.

The images in the article are taken from the Snowflake Arctic presentation. The opening image is taken from the Life at Snowflake page.